Real-Time Defense: The Power of Data-in-Transit Classification

March 6, 2024

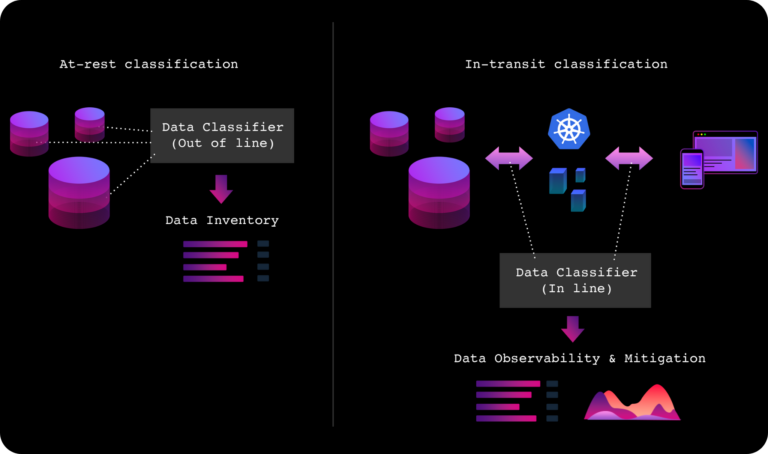

As modern architectures evolve, robust data security has become paramount. Scanning data at rest is no longer sufficient: AI-enabled workloads and multi-cloud environments hosting tens of thousands of ephemeral microservices are driving a foundational shift toward data-in-transit classification. Classifying data in transit is essential for observing data access, mitigating threats, and upholding regulatory compliance in production environments.

Existing solutions are good at identifying sensitive data in storage, but data at rest is only part of the challenge. The critical piece is classifying sensitive data types in live traffic, in real time. That classification is the foundation of a defensive security posture, because it lets you answer the crucial questions with confidence: Who is accessing sensitive data? Was the access an inadvertent error or the work of a malicious threat actor? How do I enforce data sovereignty and protect cross-border data flows?

Reimagining Data Security in Modern Architectures

Traditionally, security teams have relied on inline network security products at points such as the ingress, the API gateway, or the content delivery network (CDN) to safeguard infrastructure and segment critical networks. This approach falters inside the service mesh, where traditional methods like IP address blocking and signature-based detection on request traffic are architecturally incompatible and inadequate for detecting exfiltration. As hybrid, multi-cloud, and Kubernetes-based microservice architectures have spread over the past decade, a significant security gap has opened, particularly for data in transit.

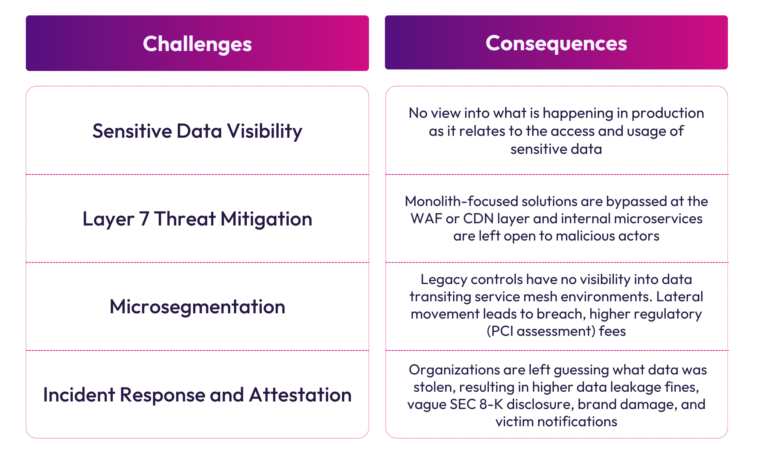

The dynamic nature of modern architectures magnifies the complexity of safeguarding data in transit. Regulated organizations face escalating costs and risks from accidental data leaks to the public internet or to internal systems. Addressing these challenges requires real-time data-in-transit classification that monitors data flows to keep sensitive information safe. That classification must happen in live production traffic without sacrificing scalability or performance, without false positives, and without duplicating sensitive data during analysis. Done poorly, it leaves gaps in each of the following areas:

Sensitive Data Visibility:

Service-level data visibility is at the crux of effective security. If we can’t see the type and amount of sensitive data leaving a service through web, log, or database traffic, we can’t detect when something goes wrong. Picking out sensitive data emissions from ordinary traffic lets practitioners detect abuse and previously unknown data leakage, and gives them valuable insight into how applications behave in production.
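
What “type and amount of sensitive data” means at the payload level can be illustrated with a deliberately small Python sketch. It assumes two illustrative detectors, a simplified email regex and a card-number pattern confirmed with the Luhn checksum to cut false positives; `classify` and `luhn_valid` are hypothetical names, and this is not LeakSignal’s classifier. It returns only a count per data type, so no sensitive value is copied out of the payload, in line with the requirement above that analysis not duplicate sensitive data.

```python
import re
from collections import Counter

# Simplified email pattern (illustrative, not RFC 5322 complete).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Candidate card numbers: 13 to 19 digits, optionally separated by single spaces or dashes.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    # Walk from the rightmost digit, doubling every second one.
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def classify(body: str) -> Counter:
    """Count sensitive data types in a payload without keeping the matched values."""
    counts = Counter()
    counts["email"] = len(EMAIL_RE.findall(body))
    for match in CARD_RE.finditer(body):
        digits = match.group().replace(" ", "").replace("-", "")
        # Only count candidates that pass Luhn, to reduce false positives.
        if luhn_valid(digits):
            counts["credit_card"] += 1
    # Unary plus returns a copy without zero counts.
    return +counts
```

Applied to every response body a service emits, per-type counts like these form the per-service emission signal described above; a 13-digit order ID, for example, is rejected unless it happens to pass the Luhn check.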

Layer 7 Threat Mitigation:

Sensitive data visibility holds little value without a way to mitigate security incidents. Because in-transit classification sees which authenticated identity received which data, it gives organizations a unique view of data access and lets them identify and block abusive behavior proactively. By analyzing traffic patterns and correlating data access with authentication tokens, organizations can manage, and attest to, each identity’s access to sensitive data.
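
To make “correlating data access with authentication tokens” concrete, here is a minimal Python sketch under stated assumptions: the proxy has already extracted an identity (for example, a token’s subject) from each request, and some classifier has already counted the sensitive records in each response. The hypothetical `IdentityMonitor` keeps a per-identity sliding window and flags any identity that receives more than `max_records` sensitive records within `window_seconds`. Both thresholds are illustrative; a real deployment would more likely baseline normal behavior per identity than rely on one fixed limit.

```python
from collections import defaultdict, deque


class IdentityMonitor:
    """Hypothetical sliding-window tracker of sensitive records served per identity."""

    def __init__(self, window_seconds: float = 60.0, max_records: int = 100):
        self.window_seconds = window_seconds
        self.max_records = max_records
        # identity (e.g. a token's subject) -> deque of (timestamp, record_count)
        self._events = defaultdict(deque)

    def observe(self, identity: str, timestamp: float, records: int) -> bool:
        """Record one response; return True if the identity exceeded its budget."""
        events = self._events[identity]
        events.append((timestamp, records))
        # Drop events that have fallen out of the window so only recent access counts.
        while events and events[0][0] <= timestamp - self.window_seconds:
            events.popleft()
        return sum(count for _, count in events) > self.max_records
```

A `True` result is the point at which an enforcement layer could block the response or revoke the token; each identity is tracked independently, so one abusive client does not affect others.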

Microsegmentation:

Microsegmentation, encouraged by standards and frameworks such as PCI DSS and NIST guidance, is a pivotal practice in modern data security. Implemented properly, it limits lateral movement in the event of a breach and, by shrinking the scope of systems subject to assessment, can drastically reduce compliance assessment costs. Effective microsegmentation, however, requires ongoing monitoring and adjustment, which traditional static, policy-based approaches struggle to provide.
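
Continuous monitoring of segmentation can be framed as comparing observed flows against intended policy. The Python sketch below assumes that in-transit classification yields, for each source and destination service pair, the set of sensitive data types observed; the `Flow` type, the `POLICY` table, and the service names are all hypothetical. Any data type that reaches a destination the policy does not permit for it is reported as drift.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    """One observed service-to-service flow and the sensitive data types it carried."""
    source: str
    destination: str
    data_types: frozenset


# Hypothetical segmentation policy: data types each (source, destination) pair may carry.
POLICY = {
    ("checkout", "payments"): frozenset({"credit_card"}),
    ("checkout", "orders"): frozenset({"email"}),
}


def violations(flows, policy=POLICY):
    """Yield (flow, disallowed_types) for flows carrying data the policy does not permit."""
    for flow in flows:
        # Pairs missing from the policy are allowed to carry no sensitive data at all.
        allowed = policy.get((flow.source, flow.destination), frozenset())
        disallowed = flow.data_types - allowed
        if disallowed:
            yield flow, disallowed
```

Treating unknown pairs as allowed to carry nothing is a deny-by-default choice: a new service that starts receiving sensitive data surfaces immediately instead of silently widening a segment.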

Incident Response and Attestation:

With holistic, multi-protocol visibility into sensitive data, organizations know exactly what data is moving through and leaving their environments. If an incident does occur, data-in-transit classification provides a complete audit trail of the data that was accessed and of who or what accessed it.
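
For attestation, an audit trail is most useful when tampering is evident. The Python sketch below is an assumption, not LeakSignal’s design: the hypothetical `append_audit_event` and `verify` helpers build a hash chain in which each event stores the SHA-256 of its predecessor, and each event records counts per data type rather than raw values. Editing, reordering, or removing a past event breaks verification. The chain is tamper-evident rather than tamper-proof: truncating the tail or rewriting the entire log goes undetected unless the latest hash is also anchored somewhere the attacker cannot reach.

```python
import hashlib
import json


def append_audit_event(log: list, identity: str, service: str, data_types: dict, ts: float) -> dict:
    """Append a hash-chained event recording who accessed which sensitive data types."""
    prev_hash = log[-1]["hash"] if log else "0" * 64
    event = {
        "ts": ts,
        "identity": identity,
        "service": service,
        "data_types": dict(data_types),  # copy of counts per type, never raw values
        "prev_hash": prev_hash,
    }
    # Canonical JSON (sorted keys) so the same event always hashes the same way.
    payload = json.dumps(event, sort_keys=True).encode()
    event["hash"] = hashlib.sha256(payload).hexdigest()
    log.append(event)
    return event


def verify(log: list) -> bool:
    """Recompute the chain; an edited, reordered, or dropped middle event fails."""
    prev_hash = "0" * 64
    for event in log:
        body = {k: v for k, v in event.items() if k != "hash"}
        if body["prev_hash"] != prev_hash:
            return False
        payload = json.dumps(body, sort_keys=True).encode()
        if hashlib.sha256(payload).hexdigest() != event["hash"]:
            return False
        prev_hash = event["hash"]
    return True
```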

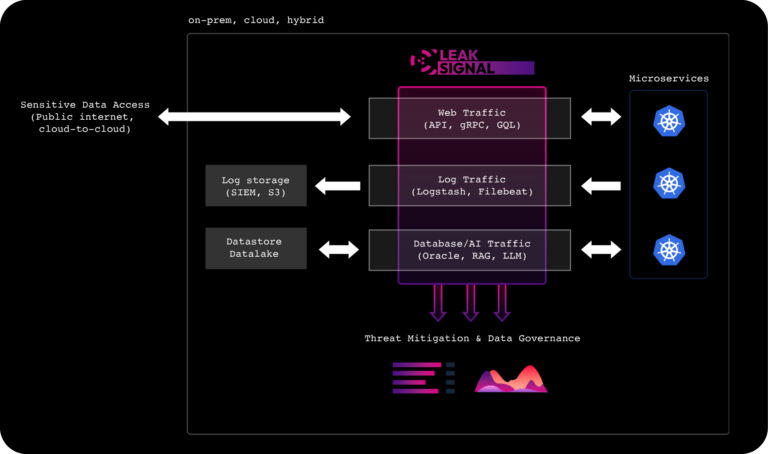

The LeakSignal Approach to Modern Architecture Data Security

LeakSignal has pioneered a groundbreaking approach to data-in-transit classification that lets organizations observe data access, mitigate threats, and uphold regulatory compliance in production environments. Unlike traditional solutions that retrofit existing tools or reroute traffic away from its natural path, LeakSignal runs natively within cloud-native architectures, analyzing encrypted traffic at the service level in real time to classify every sensitive data flow. This approach brings significant savings and streamlined processes to Platform Engineering, Security Operations, and governance, risk, and compliance (GRC) teams in understanding how sensitive data is accessed and where it is headed.

By integrating network and data security, LeakSignal helps organizations uphold data integrity and compliance across diverse infrastructures. As security operations evolve, LeakSignal stands at the forefront, providing a modern way to protect an organization’s most important asset: its sensitive data.